
Graph linearisation #187


This was referenced Apr 30, 2020


MadLittleMods added a commit to matrix-org/synapse that referenced this issue on Feb 7, 2022:

…vers (MSC2716) (#11114)

Fix #11091
Fix #10764 (side-stepping the issue because we no longer have to deal with `fake_prev_event_id`)

1. Made the `/backfill` response return messages in `(depth, stream_ordering)` order (previously only sorted by `depth`)
   - Technically, it shouldn't really matter how `/backfill` returns things, but I'm just trying to make the `stream_ordering` a little more consistent from the origin to the remote homeservers in order to get the order of messages from `/messages` consistent ([sorted by `(topological_ordering, stream_ordering)`](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)).
   - Even now that we return backfilled messages in order, it still doesn't guarantee the same `stream_ordering` (and more importantly the [`/messages` order](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)) on the other server. For example, if a room has a bunch of history imported and someone visits a permalink to a historical message back in time, their homeserver will skip over the historical messages in between and insert the permalink as the next message in the `stream_order`, which totally throws off the sort.
   - This will be even more the case when we add the [MSC3030 jump to date API endpoint](matrix-org/matrix-spec-proposals#3030) so the static archives can navigate and jump to a certain date.
   - We're solving this in the future by switching to [online topological ordering](matrix-org/gomatrixserverlib#187) and [chunking](#3785), which by their nature will apply retroactively to fix any inconsistencies introduced by people permalinking.
2. As we're navigating `prev_events` to return in `/backfill`, we order by `depth` first (newest -> oldest) and now also tie-break based on the `stream_ordering` (newest -> oldest). This is technically important because MSC2716 inserts a bunch of historical messages at the same `depth`, so it's best to be prescriptive about which ones we should process first. In reality, I think the code already looped over the historical messages as expected because the database is already in order.
3. Making the historical state chain and historical event chain float on their own by having no `prev_events` instead of a fake `prev_event`, which caused backfill to get clogged with an unresolvable event. Fixes #11091 and #10764.
4. We no longer find connected insertion events by finding a potential `prev_event` connection to the current event we're iterating over. We now rely solely on marker events, which, when processed, add the insertion event as an extremity, and the federating homeserver can ask about it when the time comes.
   - Related discussion, #11114 (comment)

#### Why aren't we sorting topologically when receiving backfill events?

> The main reason we're going to opt to not sort topologically when receiving backfill events is because it's probably best to do whatever is easiest to make it just work. People will probably have opinions once they look at [MSC2716](matrix-org/matrix-spec-proposals#2716), which could change whatever implementation anyway.
>
> As mentioned, ideally we would do this, but the code necessary to make the fake edges gets confusing and gives an impression of “just whyyyy” (feels icky). This problem also dissolves with online topological ordering.
>
> -- #11114 (comment)

See #11114 (comment) for the technical difficulties.


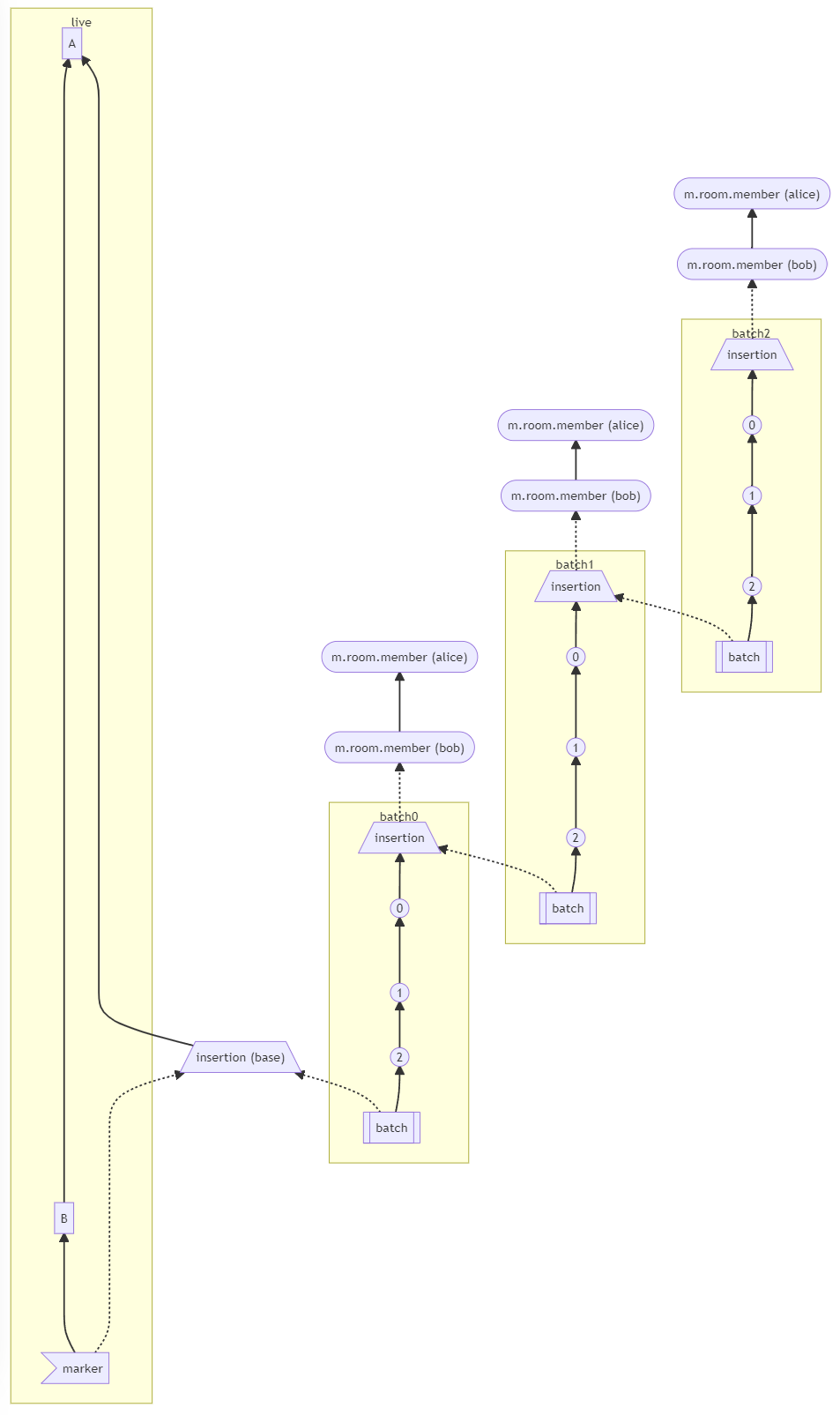

Problem context

Rooms are a directed acyclic graph. Events contain a `depth` field which gives an approximate ordering. The `depth` can lie: e.g. for the events A -> B -> C, nothing stops C saying `depth: 11` even though the depth is actually 3 (starting at 1 with A). Basically, we should not use the `depth` provided by servers.

However, we do. We rely on the `depth` to work out topological tokens when backfilling via the CSAPI call `/messages`. If two or more events share the same `depth` value, we tiebreak based on the stream position (which is just a monotonically increasing integer). This is "okay", but allows attackers to inject events into any point in room history by fiddling with the `depth`. This doesn't affect state, just the timeline sent to clients.

Proposed solution

We need to calculate `depth` for every event we receive based on `prev_events`. We may also need to retrospectively update the depth of events when we get told about new events.

The naive solution would be to recalculate the depth for every single event when a new event arrives. This takes Θ(n+m) time per edge, where n is the number of nodes and m the number of edges, so inserting m edges takes Θ(m^2) time (a connected DAG has n ≤ m+1, so each recalculation is Θ(m)). There are algorithms which perform much better than this, notably Katriel–Bodlaender, which:

We should implement this in GMSL, or even as a stand-alone library, with an interface for the ordinal data structure:
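Setting the ordinal interface aside, the naive recalculation described above can be sketched with Kahn's algorithm. This is a minimal Go illustration under stated assumptions, not a GMSL API: `computeDepths` is a hypothetical helper that receives the whole `prev_events` map (event ID to the IDs it references) and is rerun from scratch whenever an event arrives, which is exactly the Θ(n+m)-per-insert baseline. It is not an implementation of Katriel–Bodlaender.

```go
package main

import "fmt"

// computeDepths recalculates the depth of every event from scratch, using
// only prev_events: events with no known prev_events get depth 1 and every
// other event gets 1 + the maximum depth of its prev_events. Depths claimed
// by the sending server are never consulted. Each event and edge is visited
// once, i.e. Θ(n+m).
func computeDepths(prevEvents map[string][]string) (map[string]int, error) {
	indegree := make(map[string]int, len(prevEvents))
	children := make(map[string][]string)
	for id := range prevEvents {
		indegree[id] = 0
	}
	for id, prevs := range prevEvents {
		for _, p := range prevs {
			if _, known := prevEvents[p]; !known {
				continue // prev_event we never received: ignored in this sketch
			}
			indegree[id]++
			children[p] = append(children[p], id)
		}
	}

	// Kahn's algorithm: an event is dequeued only after all its known
	// prev_events have been, so its depth is final by then.
	depth := make(map[string]int, len(prevEvents))
	var queue []string
	for id, d := range indegree {
		if d == 0 {
			depth[id] = 1
			queue = append(queue, id)
		}
	}
	processed := 0
	for len(queue) > 0 {
		id := queue[0]
		queue = queue[1:]
		processed++
		for _, c := range children[id] {
			if depth[id]+1 > depth[c] {
				depth[c] = depth[id] + 1
			}
			indegree[c]--
			if indegree[c] == 0 {
				queue = append(queue, c)
			}
		}
	}
	if processed != len(prevEvents) {
		return nil, fmt.Errorf("prev_events contain a cycle")
	}
	return depth, nil
}

func main() {
	// A -> B -> C: C may claim depth 11, but only prev_events are used here.
	dag := map[string][]string{"A": nil, "B": {"A"}, "C": {"B"}}
	depths, err := computeDepths(dag)
	if err != nil {
		panic(err)
	}
	fmt.Println(depths["A"], depths["B"], depths["C"]) // 1 2 3

	// Naive approach: a new event D arrives, so recompute everything.
	dag["D"] = []string{"A", "C"}
	depths, _ = computeDepths(dag)
	fmt.Println(depths["D"]) // 4
}
```

C's claimed `depth: 11` from the problem context never enters the calculation, and adding `D` forces a full recomputation even though only one event was added.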

We should then make a separate component in Dendrite which consumes the roomserver output log, calculates depths for events, and then sends them on (or, alternatively, keep the `syncapi` in charge of doing this).

NB: We still have the outstanding problem of what to do if the depths change and hence the linearisation of the DAG changes.

NBB: We still need to handle chunks of DAG (e.g. join the room, get some events, leave the room for a while, then rejoin). The above algorithm would need to be applied to each chunk independently, with book-keeping for a chunk along the lines of (from @erikjohnston):
